Doubly Robust Methods in Causal Inference: A Powerful Approach to Estimating Treatment Effects

In the world of causal inference, we’re constantly seeking methods that can provide accurate and reliable estimates of treatment effects. One approach that has gained significant traction in recent years is the family of Doubly Robust (DR) methods. These methods offer a unique combination of flexibility and robustness that make them particularly appealing for a wide range of causal inference problems.

The Essence of Doubly Robust Methods

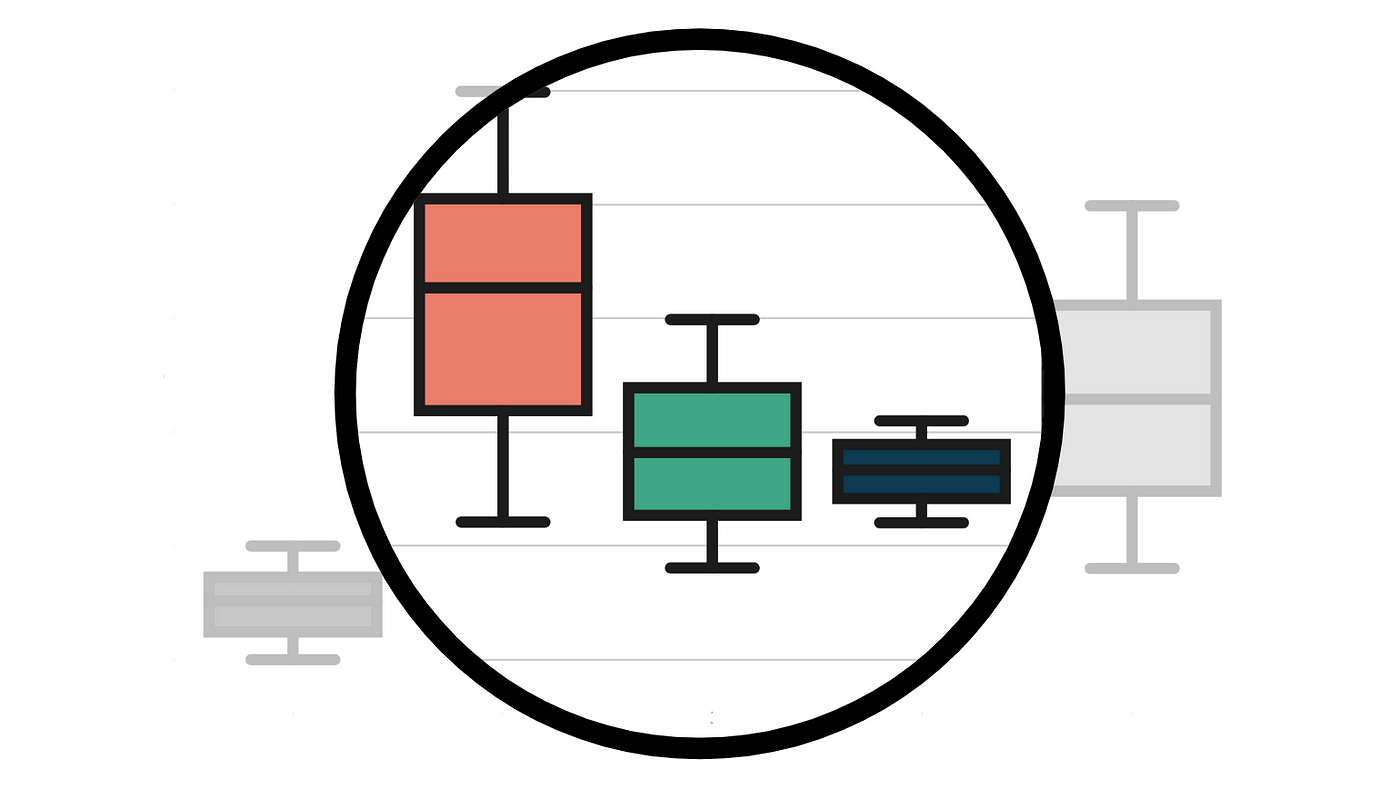

At their core, DR methods leverage two separate models:

- An outcome model: This predicts the outcome based on treatment and covariates.

- A treatment model: Also known as a propensity score model, this predicts the probability of treatment assignment based on covariates.

The “magic” of DR methods lies in their ability to provide consistent estimates if either of these models is correctly specified. This property provides a safeguard against model misspecification, which is a common concern in real-world data analysis.

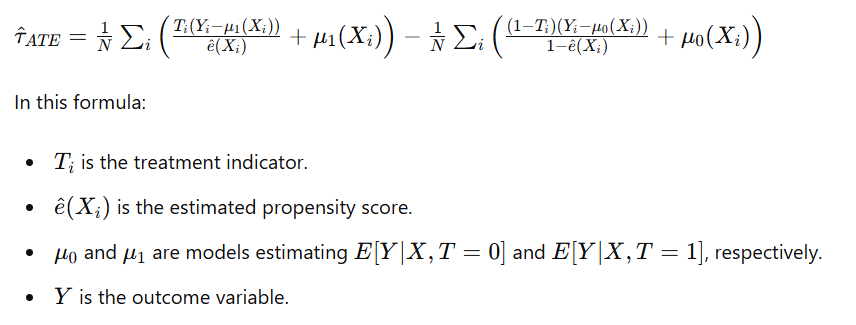

Here’s the formula for a basic DR estimator:

Implementing DR Methods with DoWhy and EconML

The DoWhy library, in conjunction with EconML, provides a convenient way to implement DR methods. Here’s an example of how to use a Linear DR-Learner. We’ll use logistic regression for propensity score modeling and an LGBM regressor for the outcome model.

estimate = model.estimate_effect(

identified_estimand=estimand,

method_name='backdoor.econml.dr.LinearDRLearner',

target_units='ate',

method_params={

'init_params': {

'model_propensity': LogisticRegression(),

'model_regression': LGBMRegressor(n_estimators=1000, max_depth=10)

},

'fit_params': {}

}

)Variants of DR-Learners

While the Linear DR-Learner is often a good starting point, EconML provides several variants that can be useful in different scenarios:

- DRLearner: This allows for non-linear final stage models, which can capture more complex treatment effects.

- SparseLinearDRLearner: This uses debiased lasso regression in the final stage, making it suitable for high-dimensional feature spaces or when the number of features approaches or exceeds the number of observations.

- ForestDRLearner: This employs a Causal Forest as the final stage model, which can be particularly effective for capturing complex, non-linear treatment effects and providing valid confidence intervals through bootstrapping.

Targeted Maximum Likelihood Estimation (TMLE)

Another powerful DR method worth mentioning is Targeted Maximum Likelihood Estimation (TMLE). TMLE is a semi-parametric method that allows us to use flexible machine learning algorithms while still maintaining desirable statistical properties for inference.

TMLE works by first estimating the outcome and treatment models, then applying a “targeting” step to optimize the bias-variance trade-off when estimating the causal effect. This targeting step involves fitting a parametric submodel to reduce residual bias in the estimate of the parameter of interest.

One of the key advantages of TMLE is that it can provide valid confidence intervals without requiring bootstrapping, which can be computationally intensive for large datasets. Additionally, TMLE has been shown to perform well under violations of the positivity assumption and in smaller sample sizes.

To implement TMLE in Python, you have a couple of options:

- IBM’s causallib: This library provides a comprehensive implementation of TMLE.

- zEpid: This epidemiology-focused library also includes TMLE implementation.

Here’s a basic example of how you might use TMLE with causallib:

from causallib.estimation import TMLE

tmle = TMLE(learner=RandomForestClassifier())

tmle.fit(X, t, y)

ate = tmle.estimate_population_outcome()Conclusion

Doubly Robust methods, including DR-Learners and TMLE, offer a powerful approach to causal inference. By leveraging both outcome and treatment models, they provide a safeguard against model misspecification and often yield more precise estimates of treatment effects.

When applying these methods, it’s crucial to consider the specifics of your data and research question. Start with simpler models like LinearDRLearner, and only move to more complex variants if necessary. Always validate your results and compare different approaches to ensure you’re getting the most accurate causal estimates possible.

Remember, in causal inference, our goal isn’t just to fit the data well, but to uncover true causal relationships. Doubly Robust methods are a valuable tool in this quest, helping us navigate the complex landscape of cause and effect with greater confidence and precision.