Unlocking the Power of Panel Data: A Beginner’s Guide with Python’s linearmodels

If you’re delving into data analysis, you’ve likely encountered cross-sectional data (data at one point in time) or time-series data (data over time for one entity). But what if you have data on multiple entities observed over multiple time periods? Welcome to the world of panel data!

Panel data is incredibly powerful because it allows us to control for unobserved factors and study dynamic relationships in ways that purely cross-sectional or time-series data cannot. In this blog post, we’ll embark on a journey through panel data analysis using the excellent Python library linearmodels, designed by @bashtage.

We’ll use a classic example: analyzing factors affecting wages.

Our Data: The Wage Panel Dataset

Let’s start by understanding our data. We’re using a common panel dataset from econometrics, focusing on individual wages.

import pandas as pd

import statsmodels.api as sm

from linearmodels.panel import PanelOLS, PooledOLS, RandomEffects

from linearmodels.datasets import wage_panel

# Load the data

data = wage_panel.load()

# Convert year to a categorical type for proper handling as time fixed effects

year = pd.Categorical(data.year)

# Set the multi-index for panel data: 'nr' (individual ID) and 'year' (time period)

data = data.set_index(["nr", "year"])

# Assign the categorical year back to the DataFrame

data["year"] = year

# Let's peek at the data and its description

print(wage_panel.DESCR) # This would print details about the dataset

print(data.head())

Data Variables:

- nr: Our entity identifier. This uniquely identifies each individual in our dataset.

- year: Our time identifier. This indicates the year of the observation for each individual.

- lwage: The dependent variable – the natural logarithm of hourly wage. Using the log allows us to interpret coefficients as approximate percentage changes in wages.

- black: A dummy variable (1 if Black, 0 otherwise).

- hisp: A dummy variable (1 if Hispanic, 0 otherwise).

- exper: Years of work experience.

- expersq: Experience squared (used to capture non-linear effects of experience on wages).

- married: A dummy variable (1 if married, 0 otherwise).

- educ: Years of education.

- union: A dummy variable (1 if a member of a union, 0 otherwise).

- Other variables like occupation are also present but not used in these examples.

Key Data Preparation for linearmodels:

The step data = data.set_index(["nr", "year"]) is crucial. It tells linearmodels that nr identifies unique individuals and year identifies the time periods for those individuals. This creates a “MultiIndex”, which is how panel data is typically structured in pandas; linearmodels expects the entity level first and the time level second.
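Before fitting anything, it can help to confirm that the index really has the entity-time shape linearmodels expects. Here is a minimal sketch on a made-up three-person panel (the values are hypothetical; only the column names mirror wage_panel):

```python
import pandas as pd

# Hypothetical toy panel: 3 individuals ("nr") observed in 2 years each.
# Column names mirror wage_panel, but the values are made up for illustration.
toy = pd.DataFrame({
    "nr":    [1, 1, 2, 2, 3, 3],
    "year":  [1980, 1981, 1980, 1981, 1980, 1981],
    "lwage": [1.20, 1.35, 0.90, 1.05, 1.60, 1.62],
})

# Entity level first, time level second.
toy = toy.set_index(["nr", "year"])

# Observations per individual: a balanced panel has the same count for everyone.
obs_per_entity = toy.groupby(level="nr").size()
is_balanced = obs_per_entity.nunique() == 1

# All years for individual 2 (the remaining index level is 'year').
person_2 = toy.xs(2, level="nr")

print(list(toy.index.names))
print(obs_per_entity.to_dict())
print(is_balanced)
print(person_2)
```

On the real data, data.groupby(level="nr").size() should report 8 observations for each of the 545 individuals, matching the balanced-panel dimensions shown in the model summaries.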

Understanding Panel Data Models: A Spectrum of Assumptions

The core challenge in panel data is how to deal with those unobserved “Individual Traits” (alpha_i) that affect our dependent variable (wages) but aren’t explicitly measured. Different models make different assumptions about these traits:

- Pooled OLS: Ignores alpha_i, folding it into the error term (harmless only if it is uncorrelated with our explanatory variables).

- Random Effects (RE): Acknowledges alpha_i but assumes it’s random and unrelated to our other variables.

- Fixed Effects (FE): Directly accounts for alpha_i by removing its influence, even if it’s related to our other variables.
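All three models are different treatments of the same equation. In standard textbook notation (the symbols are ours, with i indexing individuals and t indexing years):

```latex
\text{lwage}_{it} = \beta_0 + x_{it}'\beta + \alpha_i + \varepsilon_{it},
\qquad i = 1, \dots, 545, \quad t = 1980, \dots, 1987
```

Pooled OLS and Random Effects treat alpha_i + epsilon_it as one composite error and need alpha_i to be uncorrelated with x_it. Fixed Effects eliminates alpha_i and therefore does not need that assumption.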

Let’s explore each one!

Model 1: Pooled OLS – The “Simple” Approach

The Pooled OLS (Ordinary Least Squares) model is the simplest way to analyze panel data. It essentially stacks all your observations (all individuals across all years) into one large dataset and runs a standard OLS regression, as if they were independent cross-sectional observations.

# Independent variables for Pooled OLS

exog_vars_pooled = ["black", "hisp", "exper", "expersq", "married", "educ", "union", "year"]

exog_pooled = sm.add_constant(data[exog_vars_pooled])

# Instantiate and fit the PooledOLS model

mod_pooled = PooledOLS(data.lwage, exog_pooled)

pooled_res = mod_pooled.fit(cov_type='unadjusted') # Unadjusted standard errors for simplicity here

print(pooled_res)

PooledOLS Estimation Summary (Key Highlights):

- Dep. Variable: lwage: Our target variable.

- Estimator: PooledOLS: Confirms the model type.

- R-squared: 0.1893: This means about 18.93% of the total variation in lwage is explained by our chosen independent variables.

- No. Observations: 4360, Entities: 545, Time periods: 8: Shows the dimensions of our panel. Each of the 545 individuals has 8 observations.

- F-statistic: 72.459, P-value: 0.0000: The overall model is highly statistically significant, meaning our independent variables collectively explain a significant portion of wage variation.

Parameter Estimates (Pooled OLS Interpretation):

| Parameter | Estimate | P-value | Interpretation (approx. % change in wage) | Significance |
|---|---|---|---|---|
| const | 0.0921 | 0.2396 | Baseline log wage for a non-black, non-Hispanic, unmarried, non-union individual with 0 experience/education in base year (not significant) | Not Sig. |
| black | -0.1392 | 0.0000 | 13.92% lower wage for Black individuals (highly significant) | Sig. |
| hisp | 0.0160 | 0.4412 | 1.60% higher wage for Hispanic individuals (not significant) | Not Sig. |
| exper | 0.0672 | 0.0000 | Wages increase with experience… | Sig. |
| expersq | -0.0024 | 0.0033 | …but at a diminishing rate (inverted U-shape). Both significant. | Sig. |
| married | 0.1083 | 0.0000 | 10.83% higher wage for married individuals (highly significant) | Sig. |
| educ | 0.0913 | 0.0000 | 9.13% higher wage for each additional year of education (highly significant) | Sig. |
| union | 0.1825 | 0.0000 | 18.25% higher wage for union members (highly significant) | Sig. |
| year.198X | (various) | (various) | Wages tend to be higher in later years compared to the base year (e.g., year.1987 is 17.38% higher than 1980). | Mixed Sig. |
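The “approx. % change” column reads each coefficient b as 100·b percent, which is accurate only for small b. In a log-wage model, a one-unit change in a regressor (including a dummy switching from 0 to 1) implies a wage change of 100·(exp(b) - 1) percent. A small sketch applying that to a few of the Pooled OLS estimates above:

```python
import math


def pct_change_from_log_coef(b: float) -> float:
    """Exact % change in wage implied by coefficient b in a log-wage model,
    for a one-unit change in the regressor (e.g. a dummy going from 0 to 1)."""
    return 100.0 * (math.exp(b) - 1.0)


# Pooled OLS estimates copied from the table above.
pooled = {"black": -0.1392, "married": 0.1083, "educ": 0.0913, "union": 0.1825}

for name, b in pooled.items():
    approx = 100.0 * b
    exact = pct_change_from_log_coef(b)
    print(f"{name:8s} approx {approx:6.2f}%   exact {exact:6.2f}%")
```

For union the exact figure is about 20.0% rather than 18.25%, and for black about -13.0% rather than -13.92%; for educ and married the gap is smaller.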

Pooled OLS: The Assumption Problem

The biggest weakness of Pooled OLS in panel data is its core assumption: that the unobserved “Individual Trait” (alpha_i) doesn’t exist, or if it does, it’s not correlated with any of our explanatory variables. This is rarely true in real-world scenarios.

For example, if naturally more ambitious people (high alpha_i) also tend to get more education, then Pooled OLS will wrongly attribute part of ambition’s wage effect to education, biasing the education coefficient. In other words, Pooled OLS suffers from omitted variable bias whenever alpha_i matters for wages and is correlated with the regressors X.
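A small simulation makes this concrete. The data below are made up (not the wage panel): the true slope is 0.05, and a hypothetical trait alpha_i raises both the regressor x and the outcome y. Pooled OLS runs on the stacked data; the within estimator subtracts each person’s own average first:

```python
import numpy as np

rng = np.random.default_rng(0)
n_people, n_years = 500, 8
true_beta = 0.05

# Hypothetical "ambition" trait alpha_i, fixed over time for each person.
alpha = rng.normal(0.0, 0.3, size=n_people)

# The regressor x_it is correlated with alpha_i (ambitious people have more x).
x = 2.0 * alpha[:, None] + rng.normal(0.0, 1.0, size=(n_people, n_years))
y = 1.0 + true_beta * x + alpha[:, None] + rng.normal(0.0, 0.2, size=(n_people, n_years))


def ols_slope(x, y):
    """Slope from a simple regression of y on x with an intercept."""
    xc, yc = x - x.mean(), y - y.mean()
    return float((xc * yc).sum() / (xc ** 2).sum())


# Pooled OLS: stack all person-years and ignore alpha_i.
pooled_slope = ols_slope(x.ravel(), y.ravel())

# Within (FE) estimator: subtract each person's own time average first.
x_within = x - x.mean(axis=1, keepdims=True)
y_within = y - y.mean(axis=1, keepdims=True)
fe_slope = ols_slope(x_within.ravel(), y_within.ravel())

print(f"true beta: {true_beta}, pooled: {pooled_slope:.3f}, within: {fe_slope:.3f}")
```

For these settings the pooled slope should be close to its theoretical limit 0.05 + Cov(x, alpha)/Var(x) = 0.05 + 0.18/1.36 ≈ 0.18, while the within estimate stays close to 0.05.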

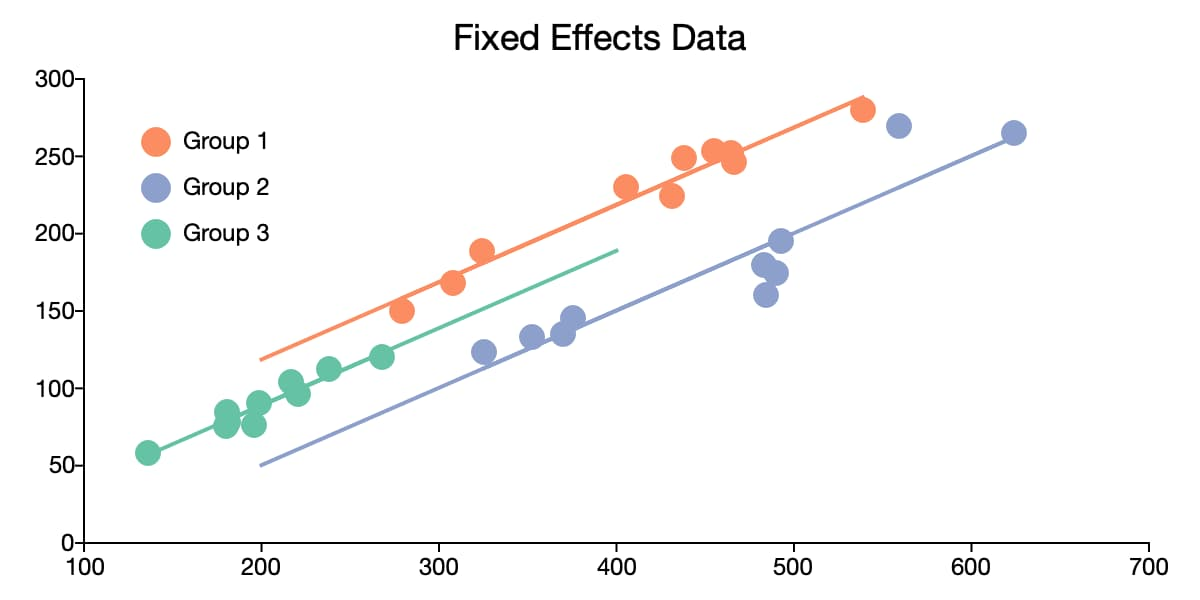

Model 2: Fixed Effects (FE) – Controlling for Unseen Individual Traits

The Fixed Effects model (what we will explore as PanelOLS(entity_effects=True)) is designed to address the “unseen individual trait” problem directly. It does this by essentially creating a unique intercept for each individual, capturing all their time-invariant unobserved characteristics.

# Independent variables for Fixed Effects (excluding 'black' and 'hisp' for now)

# We usually exclude time-invariant variables in FE since their effects are absorbed

exog_vars_fe = ["exper", "expersq", "married", "union"] # 'educ' is also left out: like race, it is treated as time-invariant (see below)

exog_fe = sm.add_constant(data[exog_vars_fe])

# Instantiate and fit the PanelOLS with entity_effects=True

mod_fe = PanelOLS(data.lwage, exog_fe, entity_effects=True)

fe_res = mod_fe.fit(cov_type='unadjusted')

print(fe_res)

Fixed Effects Estimation Summary (Key Highlights):

- Estimator: PanelOLS: The general panel estimator.

- R-squared (Within): 0.1365: This is the most relevant R-squared for FE. It shows that 13.65% of the within-individual variation in lwage is explained.

- F-test for Poolability: 9.3360, P-value: 0.0000: This is a crucial test.

  - Null Hypothesis: There are no individual effects, i.e. all alpha_i are equal, so a single common intercept (Pooled OLS) is adequate.

  - Our Result: The P-value of 0.0000 strongly rejects the null. This means the unobserved individual effects are significant, and a Fixed Effects model is preferred over a simple Pooled OLS.
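The poolability F-test compares the fit of the restricted model (one common intercept) with the unrestricted one (one intercept per individual). Below is a sketch of the textbook statistic, assuming a balanced panel and that both models share the same slope regressors; the function name is ours and the RSS values in the example call are made up:

```python
def poolability_f(rss_pooled: float, rss_fe: float, n_entities: int,
                  n_periods: int, n_slopes: int):
    """Textbook F-test that all entity intercepts are equal.

    rss_pooled: residual sum of squares with one common intercept.
    rss_fe: residual sum of squares with one intercept per entity.
    n_slopes: slope coefficients shared by both models (excluding intercepts).
    Assumes a balanced panel with n_entities * n_periods observations.
    """
    df_num = n_entities - 1                                   # N - 1 equality restrictions
    df_den = n_entities * n_periods - n_entities - n_slopes   # FE residual degrees of freedom
    f_stat = ((rss_pooled - rss_fe) / df_num) / (rss_fe / df_den)
    return f_stat, df_num, df_den


f, d1, d2 = poolability_f(rss_pooled=120.0, rss_fe=100.0,
                          n_entities=10, n_periods=5, n_slopes=2)
print(f"F = {f:.4f} with ({d1}, {d2}) degrees of freedom")
```

Under this formula, our FE specification (N = 545, T = 8, 4 slopes) would correspond to (544, 3811) degrees of freedom; linearmodels may use slightly different conventions, so treat this as an illustration of what the test compares.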

Parameter Estimates (Fixed Effects Interpretation):

| Parameter | Estimate | P-value | Interpretation (approx. % change in wage) | Significance |
|---|---|---|---|---|
| const | 1.3953 | 0.0000 | Average baseline log wage (intercept) across individuals, after accounting for all variables and individual fixed effects. | Sig. |
| exper | 0.1168 | 0.0000 | Wages initially increase with experience… | Sig. |
| expersq | -0.0043 | 0.0000 | …but at a diminishing rate. The combined effect is an inverted U-shape. These estimates are based on how an individual’s wage changes as their experience changes. | Sig. |
| married | 0.0453 | 0.0134 | 4.53% higher wage when an individual becomes married (or for the periods they are married compared to when they are not), holding other factors constant. This is a powerful “within-person” effect. | Sig. |
| union | 0.0821 | 0.0000 | 8.21% higher wage when an individual becomes a union member (or for the periods they are a member compared to when they are not). Another strong “within-person” effect. | Sig. |
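The inverted U can be made concrete. With lwage = … + b1·exper + b2·exper², the marginal effect of one more year is b1 + 2·b2·exper, and the profile peaks at exper* = -b1 / (2·b2). A sketch using the Fixed Effects estimates above (0.1168 and -0.0043); the helper name is ours:

```python
def experience_profile(b_exper: float, b_expersq: float):
    """Return (turning point, marginal-effect function) for
    lwage = ... + b_exper * exper + b_expersq * exper**2."""
    turning_point = -b_exper / (2.0 * b_expersq)

    def marginal_effect(exper: float) -> float:
        # d(lwage)/d(exper): approximate proportional wage gain from one more year
        return b_exper + 2.0 * b_expersq * exper

    return turning_point, marginal_effect


# Fixed Effects estimates from the table above.
peak, me = experience_profile(0.1168, -0.0043)
print(f"wage profile peaks at about {peak:.1f} years of experience")
for years in (1, 5, 10):
    print(f"exper={years:2d}: about {100 * me(years):.1f}% per extra year")
```

With these estimates the peak is at roughly 13.6 years (exactly 14 with the Pooled OLS estimates 0.0672 and -0.0024), and the marginal effect at 5 years is about 7.4% per extra year. Values far outside the experience range in the data are extrapolation.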

Fixed Effects: The Strength for Causality

- How it Works: Fixed Effects handles the unobserved “Individual Trait” (alpha_i) by “demeaning” the data for each individual. This means it subtracts each individual’s average over time from their observations. This effectively removes any time-invariant unobserved traits.

- Reduced Bias: Because it accounts for alpha_i, Fixed Effects is generally less prone to omitted variable bias if alpha_i is correlated with your other variables. This makes it a stronger candidate for causal inference.

- The Limitation: You cannot estimate the effect of variables that do not change over time for an individual (e.g., black, hisp, or gender). Their influence is absorbed into the individual’s fixed effect. The same applies to educ if it is constant within each person; if it does vary, FE uses only the changes in educ (e.g., someone going back to school), not the overall effect of having a certain education level.
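Demeaning and “one intercept per individual” give the same slope (this is the Frisch-Waugh-Lovell theorem). A small numpy sketch on simulated data (all numbers made up) that fits both ways:

```python
import numpy as np

rng = np.random.default_rng(1)
n_people, n_years = 50, 6

# Made-up panel: person-specific intercepts alpha_i plus a common slope of 0.08.
alpha = rng.normal(1.0, 0.5, size=n_people)
x = rng.normal(10.0, 2.0, size=(n_people, n_years)) + 3.0 * alpha[:, None]
y = alpha[:, None] + 0.08 * x + rng.normal(0.0, 0.1, size=(n_people, n_years))

# (1) Within estimator: demean x and y by each person's own average.
xd = (x - x.mean(axis=1, keepdims=True)).ravel()
yd = (y - y.mean(axis=1, keepdims=True)).ravel()
beta_within = float(xd @ yd / (xd @ xd))

# (2) LSDV: regress y on x plus one dummy column per person (no separate constant).
person = np.repeat(np.arange(n_people), n_years)   # person id for each stacked row
dummies = np.eye(n_people)[person]                 # shape (n_people * n_years, n_people)
X = np.column_stack([x.ravel(), dummies])
coef, *_ = np.linalg.lstsq(X, y.ravel(), rcond=None)
beta_lsdv = float(coef[0])

print(beta_within, beta_lsdv)  # equal up to floating-point error
```

For our data the dummy-variable route would need a 545-column design matrix, which is one reason panel estimators typically demean instead.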

Model 3: Random Effects (RE) – A Compromise

The Random Effects model is an intermediate approach. It acknowledges the existence of the unobserved “Individual Trait” (alpha_i) but treats it as a random component of the error term, rather than something to be directly removed.


# Independent variables for Random Effects (same as Pooled OLS to allow comparison)

exog_vars_re = ["black", "hisp", "exper", "expersq", "married", "educ", "union", "year"]

exog_re = sm.add_constant(data[exog_vars_re])

# Instantiate and fit the RandomEffects model

mod_re = RandomEffects(data.lwage, exog_re)

re_res = mod_re.fit(cov_type='unadjusted')

print(re_res)

# View the variance decomposition

print("\nVariance Decomposition:")

print(re_res.variance_decomposition)

Random Effects Estimation Summary (Key Highlights):

- Estimator: RandomEffects: Confirms the model type.

- Log-likelihood: -1622.5: Reported for reference. linearmodels estimates RE by feasible GLS (quasi-demeaning) rather than by maximizing this likelihood.

- Variance Decomposition: This is a unique and important output for RE.

  - Effects: 0.106946: Estimated variance of the unobserved individual effect (sigma_alpha^2).

  - Residual: 0.123324: Estimated variance of the idiosyncratic error term (sigma_epsilon^2).

  - Percent due to Effects: 0.464438: This tells us that approximately 46.44% of the total unexplained variation in lwage is due to these unobserved, time-invariant individual characteristics (alpha_i). This is a substantial amount!
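The “Percent due to Effects” figure is simply sigma_alpha^2 / (sigma_alpha^2 + sigma_epsilon^2). The same two variances also determine how strongly RE demeans the data. A sketch using the reported components; the theta formula is the textbook one for a balanced panel, and the value linearmodels actually used (it should be available via the theta attribute of the RE results) may differ slightly depending on how the variance components are estimated:

```python
import math

# Variance components reported in the RE output above.
sigma2_alpha = 0.106946   # variance of the individual effect alpha_i
sigma2_eps = 0.123324     # variance of the idiosyncratic error
T = 8                     # time periods per individual (balanced panel)

share_effects = sigma2_alpha / (sigma2_alpha + sigma2_eps)

# Textbook GLS quasi-demeaning weight for a balanced panel:
# theta = 1 - sqrt(sigma2_eps / (sigma2_eps + T * sigma2_alpha))
theta = 1.0 - math.sqrt(sigma2_eps / (sigma2_eps + T * sigma2_alpha))

print(f"share of error variance due to alpha_i: {share_effects:.4f}")
print(f"quasi-demeaning weight theta: {theta:.3f}")
```

A theta of about 0.65 means RE removes almost two thirds of each individual’s average: much closer to Fixed Effects than to Pooled OLS, but not all the way.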

Parameter Estimates (Random Effects Interpretation):

| Parameter | Estimate | P-value | Interpretation (approx. % change in wage) | Significance |
|---|---|---|---|---|
| const | 0.0234 | 0.8771 | (Not significant) | Not Sig. |
| black | -0.1394 | 0.0037 | 13.94% lower wage for Black individuals (significant) | Sig. |
| hisp | 0.0217 | 0.6116 | (Not significant) | Not Sig. |
| exper | 0.1058 | 0.0000 | Wages increase with experience… | Sig. |
| expersq | -0.0047 | 0.0000 | …at a diminishing rate. Both significant. | Sig. |
| married | 0.0638 | 0.0001 | 6.38% higher wage for married individuals (highly significant) | Sig. |
| educ | 0.0919 | 0.0000 | 9.19% higher wage for each additional year of education (highly significant) | Sig. |
| union | 0.1059 | 0.0000 | 10.59% higher wage for union members (highly significant) | Sig. |
| year.198X | (various) | (various) | (Mostly not significant or weakly significant, except for year.1987 being borderline) | Mixed Sig. |

Random Effects: The Assumption and The Trade-off

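- How it Works: Random Effects uses (feasible) Generalized Least Squares (GLS). It performs a “partial demeaning” of the data: instead of subtracting each individual’s full average, it subtracts only a fraction theta of it, where theta lies between 0 (Pooled OLS) and 1 (Fixed Effects) and is estimated from the variance components. In this sense RE sits between Pooled OLS and Fixed Effects.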

- The Critical Assumption: RE assumes that the unobserved “Individual Trait” (alpha_i) is uncorrelated with your independent variables. If this assumption holds, RE is more efficient (gives more precise estimates with smaller standard errors) than Fixed Effects because it uses both within-individual and between-individual variation in the data.

- The Trade-off: If the assumption of uncorrelated alpha_i is violated (i.e., if your unobserved individual traits are correlated with educ, married, etc.), then RE estimates will be biased and inconsistent, just like Pooled OLS.
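The partial demeaning can be sketched directly: subtract theta times each individual’s mean, then run OLS. The numpy sketch below uses simulated data (made up, with alpha_i deliberately correlated with x and a true slope of 0.05) and a fixed theta rather than one estimated from variance components, so it illustrates the mechanics of the transform, not a complete RE estimator. The middle value 0.645 is roughly what the variance components reported above imply for T = 8.

```python
import numpy as np


def quasi_demeaned_slope(x: np.ndarray, y: np.ndarray, theta: float) -> float:
    """Slope from OLS on quasi-demeaned data z_it - theta * mean_t(z_it).

    x, y have shape (n_people, n_years). theta=0 reproduces pooled OLS,
    theta=1 reproduces the within (FE) estimator; RE uses a theta in between.
    """
    xq = (x - theta * x.mean(axis=1, keepdims=True)).ravel()
    yq = (y - theta * y.mean(axis=1, keepdims=True)).ravel()
    # An ordinary intercept stands in for RE's (1 - theta) constant column.
    xq, yq = xq - xq.mean(), yq - yq.mean()
    return float(xq @ yq / (xq @ xq))


rng = np.random.default_rng(2)
n_people, n_years = 400, 8
alpha = rng.normal(0.0, 0.3, size=n_people)                              # made-up individual effects
x = 2.0 * alpha[:, None] + rng.normal(0.0, 1.0, size=(n_people, n_years))  # correlated with alpha
y = 0.05 * x + alpha[:, None] + rng.normal(0.0, 0.35, size=(n_people, n_years))

slopes = {theta: quasi_demeaned_slope(x, y, theta) for theta in (0.0, 0.645, 1.0)}
for theta, slope in slopes.items():
    print(f"theta={theta:.3f}: slope={slope:.3f}")
```

Because alpha_i is correlated with x here, the theta = 0 (pooled) slope is clearly biased upward, theta = 1 (FE) lands near 0.05, and the intermediate theta still carries some of the bias: the RE trade-off in miniature.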

Comparing the Coefficient Estimates Across Models: What Do the Numbers Tell Us?

This is where the magic happens! By comparing the estimated coefficients for the same variable across different models, we can gain insights into the underlying assumptions and potential biases. Let’s put some key coefficient estimates side-by-side to highlight these differences.

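Our Focus Variables: We’ll primarily look at married and union, as these are variables that can change over time for an individual, allowing them to appear in all three models. We’ll also briefly discuss educ and black. One caveat: our Fixed Effects specification omits the year dummies that Pooled OLS and RE include, so the comparison is not perfectly like-for-like.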

| Variable | Pooled OLS (Estimate) | Random Effects (Estimate) | Fixed Effects (Estimate) |
|---|---|---|---|
| married | 0.1083 | 0.0638 | 0.0453 |
| union | 0.1825 | 0.1059 | 0.0821 |
| educ | 0.0913 | 0.0919 | (Cannot estimate directly) |
| black | -0.1392 | -0.1394 | (Cannot estimate directly) |
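linearmodels provides a compare helper in linearmodels.panel for laying fitted results side by side (for example compare({"Pooled": pooled_res, "RE": re_res, "FE": fe_res})). The pandas sketch below instead works from the numbers reported above and adds one derived column: how much of the pooled estimate survives under FE.

```python
import pandas as pd

# Coefficients copied from the comparison table above (time-varying regressors only).
coefs = pd.DataFrame(
    {"pooled": [0.1083, 0.1825], "re": [0.0638, 0.1059], "fe": [0.0453, 0.0821]},
    index=["married", "union"],
)

# Share of the pooled estimate that remains once alpha_i is removed (FE / pooled).
coefs["fe_share_of_pooled"] = coefs["fe"] / coefs["pooled"]
print(coefs.round(3))
```

Only about 42% of the pooled marriage premium and 45% of the pooled union premium remain under Fixed Effects.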

Let’s break down what these differences in numbers imply, using our “unseen individual trait” (alpha_i) idea.

1. The married Coefficient: Why Does it Shrink?

- Pooled OLS: 0.1083 (Largest)

  - Interpretation: A married person earns approximately 10.83% more than an unmarried person, on average, in the entire dataset.

  - The Implicit Story (Potentially Flawed): Pooled OLS doesn’t “know” about those unseen individual traits (alpha_i). So, it looks at all married people and all unmarried people. It sees that married people, on average, earn more.

  - The Problem: What if people who get married are also, on average, more stable, more motivated, or have other “unseen traits” (alpha_i) that make them higher earners regardless of their marital status? Pooled OLS doesn’t separate this. It essentially attributes all of the higher wage to “being married,” even if some of it is actually due to those unseen, positive alpha_i traits that predisposed them to both marriage and higher wages. This leads to an overestimation (upward bias) of the married effect.

- Random Effects: 0.0638 (Middle Ground)

  - Interpretation: Being married is associated with approximately 6.38% higher wages.

  - The Story: Random Effects acknowledges the existence of unseen individual traits (alpha_i) and subtracts part of each person’s average (the partial demeaning described above), so it only partly separates alpha_i from the actual effect of married.

  - Why it’s smaller than Pooled OLS: By leaning partway toward within-person comparisons, it removes some of the upward bias caused by unseen traits correlated with both getting married and earning more. It’s a “compromise” that models alpha_i as a random component rather than eliminating it, so any remaining correlation still biases the estimate.

- Fixed Effects: 0.0453 (Smallest)

  - Interpretation: When the same individual changes from being unmarried to married, their wage increases by approximately 4.53%.

  - The Power of Fixed Effects: This model literally “strips out” all the unseen, unchanging individual traits (alpha_i). It focuses only on the changes within the same person. If a person gets married and their wage goes up, it’s looking at that specific change.

  - Why it’s the smallest (and often preferred for causality): This suggests that a significant portion of the “marriage premium” seen in Pooled OLS was actually due to unobserved, positive characteristics of people who tend to get married (their alpha_i), rather than marriage itself having such a large independent effect. By focusing on within-person changes, FE gives us a purer estimate, less susceptible to that “omitted variable bias.”

2. The union Coefficient: A Similar Pattern

- Pooled OLS: 0.1825 (Largest): Union members earn 18.25% more. Again, this could be biased upwards if people with certain unobserved traits (alpha_i) are both more likely to join a union and earn more.

- Random Effects: 0.1059 (Middle Ground): Being a union member is associated with 10.59% higher wages. It tries to account for some of the shared individual unobservables.

- Fixed Effects: 0.0821 (Smallest): When the same individual joins a union, their wage increases by 8.21%. This is a powerful “within-person” effect, suggesting that part of the union wage premium observed in simpler models was due to stable, unobserved qualities of those who choose to join unions.

3. educ and black: Why They Disappear in Fixed Effects

- Pooled OLS: educ (0.0913), black (-0.1392)

- Random Effects: educ (0.0919), black (-0.1394)

- Fixed Effects: (Cannot estimate directly)

- The Reason: educ (years of education) and black (racial identity) are often considered time-invariant for an individual within the typical timeframe of a panel dataset (e.g., someone’s race doesn’t change, and their formal education rarely changes significantly year-to-year in an 8-year panel).

- Fixed Effects’ Limitation: Remember, Fixed Effects works by looking at changes within each person. If a variable doesn’t change for a person over time, Fixed Effects has no way to separate its effect from that person’s unique “unseen trait” (alpha_i). The effect of black or educ (if it’s constant) would be entirely “swallowed up” by the individual’s fixed effect.

- Pooled OLS and Random Effects’ Advantage: Since they don’t completely remove alpha_i in the same way, they can provide estimates for these time-invariant variables. However, these estimates will be more susceptible to bias if those time-invariant variables are correlated with the unobserved alpha_i. For instance, if people from certain backgrounds (related to alpha_i) tend to have less education and lower wages, the educ coefficient in Pooled OLS or RE might be biased.
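Why does FE have nothing to say about black? After subtracting each person’s mean, a variable that never changes within a person becomes a column of zeros. A tiny pandas sketch with a made-up panel:

```python
import pandas as pd

# Hypothetical mini-panel: 'black' never changes within a person, 'union' does.
panel = pd.DataFrame(
    {
        "nr": [1, 1, 1, 2, 2, 2],
        "year": [1980, 1981, 1982, 1980, 1981, 1982],
        "black": [1, 1, 1, 0, 0, 0],
        "union": [0, 1, 1, 0, 0, 1],
    }
).set_index(["nr", "year"])

# Within transformation: subtract each person's own mean (what FE does).
demeaned = panel - panel.groupby(level="nr").transform("mean")
print(demeaned)
```

After demeaning, black is zero in every row, so there is no variation left from which to estimate its coefficient, while union keeps its within-person changes.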

In Essence: The Story the Numbers Tell

The general pattern here is common:

- Pooled OLS gives the largest coefficients here because it bundles the true effect of the variable with the effect of any unseen, unchanging individual traits that are correlated with both the variable and the wage. (The bias can run in either direction: if those traits were negatively correlated with a variable, Pooled OLS would understate its effect instead.)

- Random Effects tries to untangle this a bit, resulting in coefficients that, in our example, sit between Pooled OLS and Fixed Effects, but that are still potentially biased if the individual traits are strongly correlated with your variables.

- Fixed Effects gives the smallest coefficients here (for time-varying variables) because it strips away the influence of those unseen, unchanging individual traits. This often leads to the most credible estimate of the variable’s causal impact on wage within the same person.

When you see a coefficient shrink significantly from Pooled OLS to Fixed Effects (as we do for married and union), it’s a strong indicator that those “unseen individual traits” play a substantial role in explaining wages and were likely biasing your simpler Pooled OLS results upwards. This is why the Fixed Effects model is often preferred when studying the causal impact of time-varying variables in panel data.

Which Model to Choose? The Hausman Test (Next Steps)

The crucial decision between Random Effects and Fixed Effects often comes down to the Hausman Test.

- The Question: Is the “Individual Trait” (alpha_i) correlated with our independent variables (educ, married, exper, etc.)?

  - If NO (uncorrelated): Random Effects is preferred (it’s more efficient).

  - If YES (correlated): Fixed Effects is preferred (it’s more robust and consistent, even if less efficient).

Our Percent due to Effects in the RE model was high (46.44%), which confirms that unobserved individual traits matter a great deal. On its own, though, that number does not tell us whether those traits are correlated with the regressors; that is exactly what the Hausman test checks. In practice, if you suspect these unobserved traits might be correlated with your independent variables (e.g., smarter people get more education and higher wages), Fixed Effects is often the safer and more widely accepted choice for drawing causal inferences, even if you lose the ability to estimate time-invariant variables directly.
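The classic Hausman statistic is short to write down: H = (b_FE - b_RE)' [V_FE - V_RE]^(-1) (b_FE - b_RE), approximately chi-squared with as many degrees of freedom as compared coefficients. Below is a minimal numpy sketch (the function name is ours, not a linearmodels API); the example call uses made-up numbers:

```python
import numpy as np


def hausman(b_fe, b_re, cov_fe, cov_re):
    """Classic Hausman statistic comparing FE and RE on a common set of coefficients.

    Under the null that alpha_i is uncorrelated with the regressors (RE consistent
    and efficient), the statistic is approximately chi-squared with len(b_fe) dof.
    """
    diff = np.asarray(b_fe, dtype=float) - np.asarray(b_re, dtype=float)
    v_diff = np.asarray(cov_fe, dtype=float) - np.asarray(cov_re, dtype=float)
    # pinv guards against a V_fe - V_re that is not (numerically) invertible
    stat = float(diff @ np.linalg.pinv(v_diff) @ diff)
    return stat, diff.size


# Made-up example with two coefficients and diagonal covariance matrices.
stat, dof = hausman(
    b_fe=[0.10, 0.05],
    b_re=[0.08, 0.06],
    cov_fe=np.diag([0.0004, 0.0003]),
    cov_re=np.diag([0.0002, 0.0002]),
)
print(f"Hausman statistic: {stat:.3f} on {dof} degrees of freedom")
```

With the fitted models from this post, a call along the lines of hausman(fe_res.params[cols], re_res.params[cols], fe_res.cov.loc[cols, cols], re_res.cov.loc[cols, cols]), with cols = ["exper", "expersq", "married", "union"], would give the statistic, and scipy.stats.chi2.sf(stat, dof) the p-value. Two caveats: FE and RE should ideally be refit with the same time-varying regressors (ours differ in the year dummies), and the classic form assumes conventional (unadjusted) covariances.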

Final Thoughts on Causality

Can these models claim causality?

- Pooled OLS: Very weak for causality due to probable omitted variable bias.

- Random Effects: Better than Pooled OLS, but still vulnerable to bias if the critical assumption (uncorrelated alpha_i with regressors) is violated.

- Fixed Effects: The strongest of the three for causality, as it effectively controls for all time-invariant unobserved factors. However, it doesn’t control for unobserved factors that change over time or for issues like reverse causality.

For truly robust causal claims, researchers often move beyond these basic panel models to more advanced techniques like Instrumental Variables (IV) or Difference-in-Differences (DiD) if specific endogeneity or confounding issues persist.

You’ve taken a fantastic first step into panel data analysis. Understanding these foundational models is key to unlocking deeper insights from your longitudinal datasets! Keep exploring and questioning your data!